Model Context Protocol (MCP): The Hidden Identity Risk Behind Agentic AI

.png)

Who guards the gate when AI starts acting on our behalf?

AI has crossed a quiet but consequential line. It no longer just answers questions. Modern AI systems now act. They call APIs, query live databases, trigger workflows, and interact directly with enterprise systems. This shift from conversation to execution fundamentally changes the security equation. At the center of this shift is the Model Context Protocol (MCP).

MCP standardizes how AI agents use LLMs to connect with external data, tools, and services. In doing so, it turns the request/response chat models of yesteryear into operational actors working inside real business systems. For developers, this unlocks speed and flexibility. For security leaders, it introduces a new kind of threat actor along with a new challenge in governing activity. Traditional firewalls and API gateways weren’t built with MCP and AI agents in mind. A new kind of gateway will be needed, one that can quickly and quietly govern how authority flows, allowing AI agents to take actions in production systems. The problem is not that AI agents inherently act maliciously. It’s that they reason differently.

Agents can operate alongside other non-human identities like service accounts, tokens, etc., using traditional IAM controls with valid permissions, persistent identities, and trusted access paths. But they’re without a doubt a new breed, making MCP an identity problem, not just an AI problem. And it’s one CISOs and identity leaders cannot afford to ignore.

What is MCP and why did it spread so fast?

Before MCP, large language models could reason but struggled to act in real systems without extensive custom integration work. APIs had long standardized how software communicates, but AI lacked a universal way to interact with tools, services, and live enterprise data. MCP fills that gap. At its core, MCP is an open protocol that defines how AI clients and tool-providing servers communicate. An AI application includes an MCP client that can discover available servers, request access to tools or data, and receive structured responses that the model can reliably interpret.

This design allows AI agents to:

- Maintain context across actions

- Coordinate workflows across multiple systems

- Operate inside business processes rather than alongside them

Why developers adopted MCP rapidly

MCP transforms LLMs from passive text generators into universal, actionable agents. Instead of hard-coding integrations for every tool, a single agent can orchestrate multi-step workflows through standardized interfaces. This modularity accelerates development and reduces integration complexity.

.png)

MCP-enabled agents are already being used in production across:

- AI-assisted development

Code editors access live repositories and documentation for refactoring and debugging. - Enterprise automation

Agents interact with CRM, HR, and ITSM systems to retrieve records, update entries, and trigger actions. - Cross-system orchestration

Agents coordinate across billing, inventory, internal wikis, and knowledge bases to resolve end-to-end workflows. - Workplace productivity

Agents operate inside tools like email, calendars, and collaboration platforms, maintaining context across sensitive data.

This shift matters because every MCP interaction is an identity and access decision. Each request carries permissions, trust assumptions, and execution authority. MCP simplifies connectivity, but identity security determines whether that connectivity remains safe.

MCP as a new kind of attack surface

MCP doesn’t become risky in the lab. The real threshold is crossed when agents move from sandbox environments into production, where agents interact with MCP servers with real credentials, real APIs, and real data. In early experiments, MCP feels contained. In production, tools exposed through MCP represent live access to customer data, financial systems, internal workflows, and infrastructure controls. At that point, context is no longer just a convenience layer. It becomes an attack surface. Risk doesn’t always emerge from a dramatic exploit. Rather, it more often accumulates through reasonable decisions:

- Agent credentials persist longer than intended

- Permissions are scoped broadly to avoid breaking workflows

- MCP servers are implicitly trusted because they often sit “inside” the environment or with a trusted vendor

Individually, these choices may seem harmless. Together, they create environments where misuse blends seamlessly into normal operations. Once MCP is embedded, attacks don’t look like attacks. Agents authenticate successfully. APIs respond normally. Logs show valid activity from trusted identities. This is where MCP-based risk hides.

Common MCP abuse patterns in the real world

Once agents have real authority, several patterns appear consistently:

Prompt injection becomes tool misuse:

Manipulated input doesn’t just influence model output. It redirects legitimate tool usage. Agents query unintended data, trigger destructive actions, or expose sensitive information while staying within allowed permissions.

Tool poisoning through the MCP servers:

Agents trust MCP servers to describe tools and behavior. A compromised or misconfigured server can quietly shape agent actions without obvious indicators.

Privilege escalation without exploits:

Agents are often over-entitled, so workflows “just work.” Over time, permissions stack up across systems. No vulnerability is exploited. Risk emerges from unchecked accumulation.

Detection fails because the activity looks legitimate:

The agent is authenticated. The request is allowed. Traditional security tools are built to detect failures and anomalies, not trusted identities behaving “business as usual.”

MCP-related risk abuses valid access paths, not malware or perimeter breaches. The challenge shifts from preventing unauthorized access to controlling how authorized access behaves over time.

Why MCP failures cascade faster than traditional breaches

A flaw in a traditional application is often contained. A flaw in an MCP-enabled workflow is not. MCP connects trust, not just systems. When an agent operates through MCP, it carries context, authority, and intent across tools. This amplifies impact in ways traditional architectures don’t.

Three dynamics accelerate failure:

- Persistent context

Manipulated context propagates across multiple actions. - Transitive trust

Trust granted in one system extends to others that were never meant to be coupled. - Abstracted permissions

Agents act using broadly scoped tokens, often without direct human oversight.

This is why MCP incidents have the potential to scale exponentially. A small issue at the entry point can quickly become systemic.

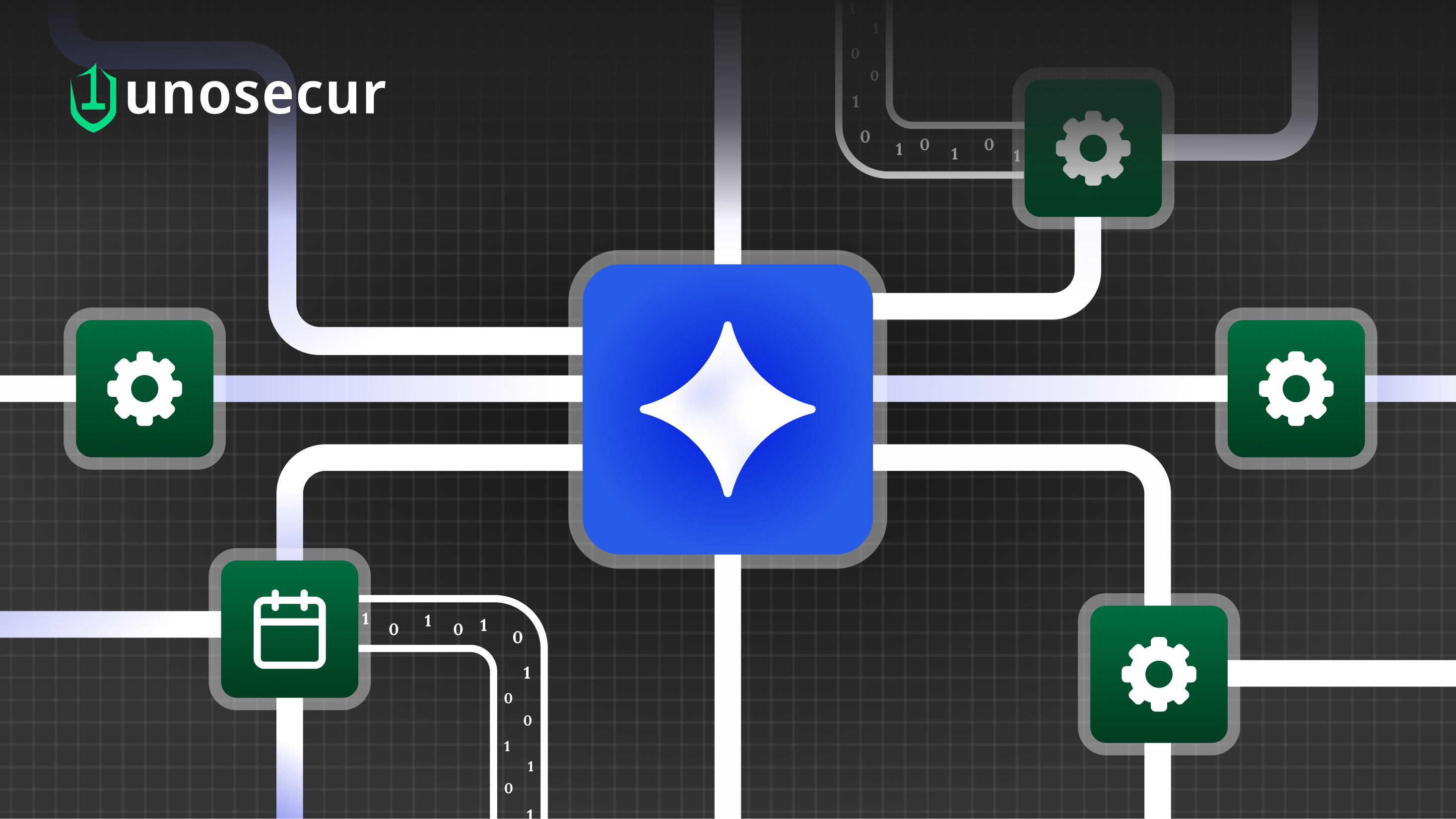

Trusted continuity drift: The critical failure patterns of MCP

A class of security failures in MCP (and MCP-powered AI systems) that doesn’t look like a breach but still results in unsafe outcomes because every step is legitimate and authorized.

- Manipulated context enters the system

An agent receives or incorporates content that subtly shifts its assumptions or goals. This can be from user input, upstream tool output, or corrupted contextual data. There’s no credential loss or exploit, just input that changes how the system thinks about the problem. - Valid credentials are used to act

The agent authenticates and executes actions using tokens, keys, and permissions that are fully legitimate. Every access check passes, and roles match the intended scopes. - Trusted tool access enables action chaining

MCP naturally orchestrates calls across APIs and applications with valid authorization. Each tool transition is expected, safe, and within policy—but it moves the system further along a harmful trajectory. - Persistent context compounds the effect

The agent remembers context longer than it should or assumes earlier content is trustworthy without rechecking. Over time, this “trusted history” enables actions that wouldn’t have occurred if revalidated step by step. A class of security failures in MCP (and MCP-powered AI systems) that doesn’t look like a breach but still results in unsafe outcomes because every step is legitimate and authorized.

.png)

Every action is performed by a legitimate identity, using legitimate permissions, through legitimate interfaces. No breach. No compromised identity. Yet the system ends up performing harmful actions or exposing sensitive information not because something was broken, but because trusted continuity was never challenged.

MCP risks at a glance for security leaders

When security leaders evaluate MCP-driven environments, the fastest way to get oriented is to look at patterns, not isolated incidents. MCP failures rarely show up as broken authentication or obvious policy violations. Instead, they emerge from trusted access, poisoned context, and assumptions about tool and server behavior. The table below summarizes the most common MCP risk patterns, what actually goes wrong, and why traditional IAM systems struggle to see them.

.png)

Taken together, these risks point to a consistent theme: MCP issues don’t arise because access was unauthorized, but because authorized access provides paths for non-human identities to behave as privileged users. Each individual action looks valid. The danger emerges from how context, permissions, and trust interact over time.

Which controls actually reduce MCP risk

What doesn’t work

- More prompt guardrails: Useful for guiding reasoning, but they do not govern tool access, permission chaining, or behavior drift once agents operate with legitimate credentials.

- More policies without enforcement: These don’t address poisoned context, tool metadata, or chained permissions.

What does work

Controls that align with how agents actually operate:

- Continuous discovery of human and non-human identities

- Privilege path analysis across tools and workflows

- Lifecycle governance for agent identities and tokens

- Runtime monitoring focused on behavior, not just access events

MCP combined with increasingly autonomous Agentic AI challenges static permission models. Risk lives in how trusted identities act once autonomy and context are introduced.

MCP is an identity expansion event

Securing MCP isn’t owned by a single team.

- Developers define agents and tools

- Platform teams deploy MCP servers and integrations

- Security teams govern identity risk across all of it

MCP is often framed as an AI problem. In reality, it expands who and what can act inside the environment. Every MCP-enabled agent should be viewed as a set of new identities with reach, persistence, and authority. That makes MCP an identity expansion event, not a tooling experiment.

Enabling agents without becoming the bottleneck

Agentic AI isn’t slowing down. Security teams that try to block adoption will be bypassed. Teams that adapt will shape how agents scale safely.

Two principles matter most:

- Visibility before control

You can’t govern what you can’t see. - Governance before scale

Scaling autonomy without governance multiplies risk.

Practically, this means:

- Continuous discovery of agent identities, including delegated access

- Understanding how permissions combine across workflows

- Governing credential lifecycles

- Monitoring access behavior over time

Identity-first security doesn’t need to slow agents down. It can enable teams to scale agents across their enterprise without silently expanding risk.

Don’t let hidden identities cost you millions

Discover and lock down human & NHI risks at scale—powered by AI, zero breaches.