The Ultimate Guide to AI Agent Lifecycle Security: From Provisioning to Decommissioning

AI agents are autonomous software entities that perform tasks, make decisions, and interact with systems. Enterprises are adopting them rapidly to automate processes, improve productivity, and enhance service delivery. That adoption creates a new cybersecurity imperative: treating AI agents as first-class digital identities and securing them through structured agent lifecycle management. Without proper controls from creation to decommissioning, these agents can become blind spots that expose sensitive data, systems, or workflows.

As organizations deploy more autonomous systems, the need for consistent, auditable, and governed controls across the entire agent lifecycle becomes critical.

What Is an AI Agent? Identity & Lifecycle Defined

An AI agent is a software entity designed to act autonomously toward specific goals, such as scheduling tasks, analyzing data, or executing workflows. Unlike traditional scripts or machine accounts, AI agents make decisions and interact dynamically with systems, APIs, and data sources.

To operate securely, AI agents require identity artifacts such as credentials, tokens, and certificates, along with structured governance throughout their lifetime. This lifecycle spans onboarding and provisioning, authentication, access enforcement, monitoring, optimization, and eventual retirement. Effective agent lifecycle management ensures that AI agents remain visible, accountable, and secure within enterprise environments.

Why Traditional IAM Falls Short

Traditional identity and access management (IAM) systems were built primarily for human users and predictable machine identities such as service accounts. They work well for static identities, but they lack the granularity and adaptability needed to secure autonomous agents that can:

- Act independently without direct human mediation

- Generate or manage their own access artifacts

- Persist across multiple systems and interactions

Because of these limitations, classic IAM controls often fail to capture the full scope of agent activity or to enforce appropriate lifecycle controls for autonomous, non-human identities. This gap increases the risk of unmanaged access, over-privileged agents, and security blind spots.

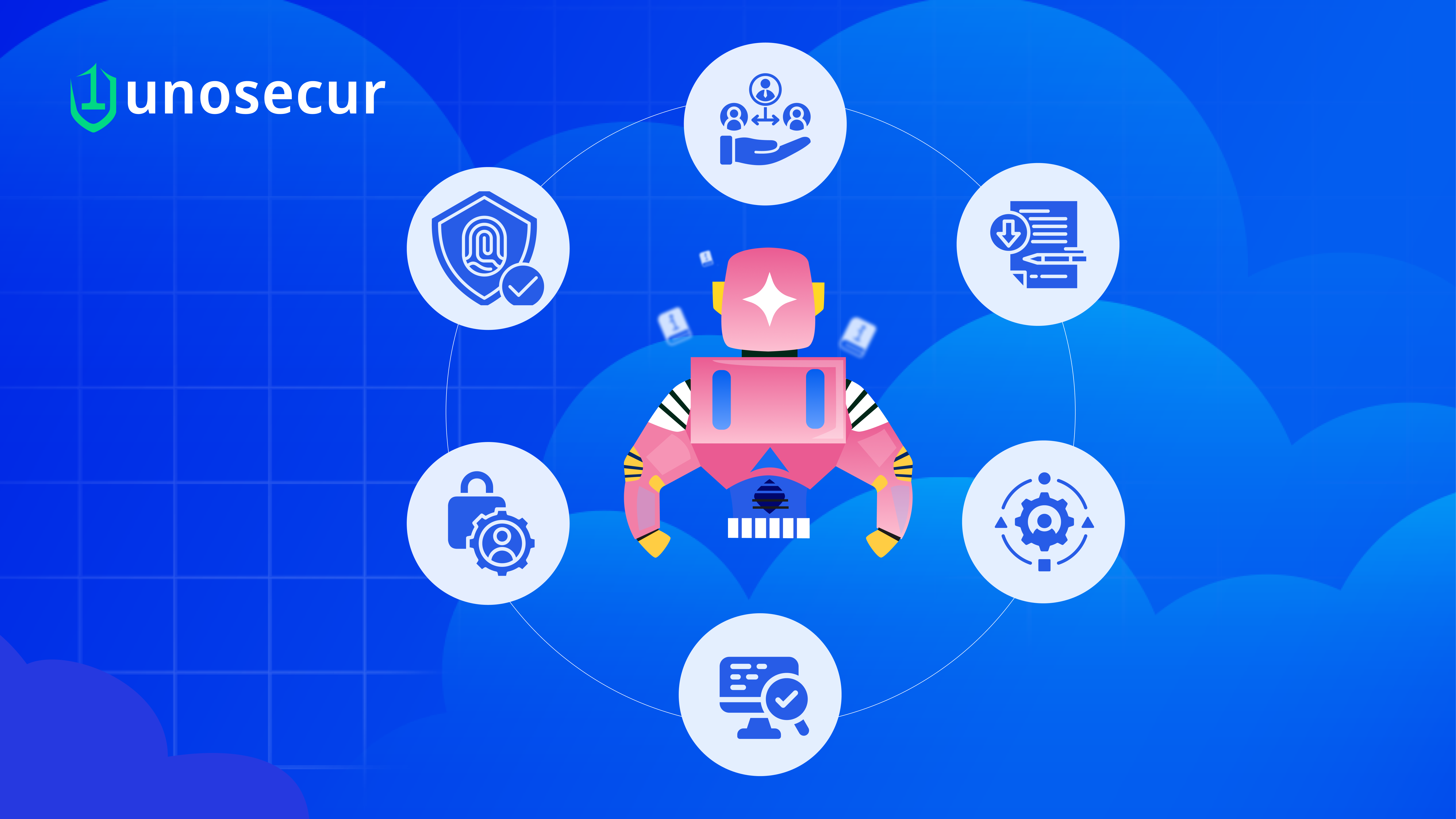

AI Agent Lifecycle Stages

AI agent lifecycle security spans a sequence of stages, governed by creation-to-decommissioning controls. Each stage requires targeted security measures and governance to ensure agents remain compliant, secure, and aligned with their intended purpose throughout their operational lifespan.

Provisioning & Onboarding

Agent provisioning & de-provisioning are foundational lifecycle stages that define how AI agents are created, governed, and eventually retired.

During provisioning, an AI agent is:

- Assigned a unique identity in an enterprise identity system

- Registered in an AI agent identity registry, a centralized catalog of all active agent identities

- Granted scoped permissions based on least-privilege principles

- Linked to a designated human owner responsible for governance and oversight

Automating provisioning workflows and maintaining an accurate AI agent identity registry helps eliminate shadow agents. These undocumented or unmanaged agents pose significant security risks due to unknown access paths and unclear ownership.
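The provisioning steps above can be sketched as a small in-memory registry. This is a minimal illustration, not a reference implementation: `AgentRecord`, `AgentRegistry`, and the example agent name, owner address, and scope string are all hypothetical, and a real deployment would back the registry with the enterprise identity system.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
import uuid


@dataclass(frozen=True)
class AgentRecord:
    agent_id: str         # unique identity
    name: str
    owner: str            # designated human owner
    scopes: frozenset     # least-privilege permissions
    created_at: datetime


class AgentRegistry:
    """Hypothetical in-memory stand-in for a centralized agent identity registry."""

    def __init__(self):
        self._agents = {}

    def provision(self, name, owner, scopes):
        # Refuse to create agents that nobody is accountable for.
        if not owner:
            raise ValueError("every agent needs a designated human owner")
        # Require explicit scopes so no agent starts with implicit access.
        if not scopes:
            raise ValueError("grant at least one explicit scope")
        record = AgentRecord(
            agent_id=str(uuid.uuid4()),
            name=name,
            owner=owner,
            scopes=frozenset(scopes),
            created_at=datetime.now(timezone.utc),
        )
        self._agents[record.agent_id] = record
        return record

    def is_registered(self, agent_id):
        return agent_id in self._agents


registry = AgentRegistry()
agent = registry.provision("invoice-bot", "alice@example.com", {"invoices:read"})
```

Rejecting a provisioning request that lacks an owner or explicit scopes is one way to make the registry itself enforce the governance rules, rather than relying on a later review to catch gaps.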

Authentication & Credentialing

Secure authentication ensures that each AI agent proves its identity every time it interacts with systems or services. Best practices include:

- Issuing short-lived credentials such as OAuth tokens instead of long-lived static keys

- Using cryptographic identities, including certificates or token binding, to prevent credential misuse

- Federating identity across systems to maintain consistent authentication policies

Strong authentication and credential management reduce the risk of credential exposure while ensuring every authentication event remains auditable.
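To illustrate why short-lived credentials limit exposure, here is a minimal sketch of a signed token with a built-in expiry, using only the Python standard library. It is not OAuth and not a production token format: `SIGNING_KEY`, `issue_token`, `verify_token`, and the five-minute default lifetime are assumptions made for the example. In practice the identity provider would issue and validate tokens.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time

# Hypothetical signing key; in practice this is held by the identity provider.
SIGNING_KEY = secrets.token_bytes(32)


def issue_token(agent_id, ttl_seconds=300, now=None):
    """Issue a signed token that expires ttl_seconds after issuance."""
    now = time.time() if now is None else now
    payload = json.dumps({"sub": agent_id, "exp": now + ttl_seconds}).encode()
    sig = hmac.new(SIGNING_KEY, payload, hashlib.sha256).digest()
    return (base64.urlsafe_b64encode(payload).decode()
            + "." + base64.urlsafe_b64encode(sig).decode())


def verify_token(token, now=None):
    """Return the agent id if the token is authentic and unexpired, else None."""
    now = time.time() if now is None else now
    try:
        payload_b64, sig_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        sig = base64.urlsafe_b64decode(sig_b64)
    except ValueError:  # malformed token or invalid base64
        return None
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(sig, expected):  # constant-time comparison
        return None
    claims = json.loads(payload)
    if now >= claims["exp"]:
        return None  # expired: the agent must authenticate again
    return claims["sub"]
```

Because every token carries its own expiry, a leaked token stops working after a few minutes without anyone having to notice the leak and revoke it.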

Access Control

Once authenticated, AI agents must operate under strict access controls tailored to their specific function and risk profile. Effective access control includes:

- Role-based or policy-driven permissions aligned with the agent’s purpose

- Dynamic, context-aware controls that adapt to environmental or risk signals

- Least-privilege access limited to required resources and actions

Policy-based provisioning and continuously evaluated access limits help prevent privilege escalation and misuse of entitlements.
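A policy check that combines least privilege with a context signal might look like the sketch below. The `GRANTS` and `MAX_RISK` tables, the action names, and the idea of a numeric `risk_score` supplied by a risk engine are all assumptions for illustration. Real policy engines express this in their own policy language.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    agent_id: str
    action: str         # e.g. "invoices:read"
    risk_score: float   # 0.0 (low) to 1.0 (high), from a hypothetical risk engine


# Hypothetical grants: each agent gets only the actions its purpose requires.
GRANTS = {"invoice-bot": {"invoices:read", "invoices:write"}}

# Hypothetical risk ceilings: higher-impact actions tolerate less risk.
MAX_RISK = {"invoices:read": 0.8, "invoices:write": 0.3}


def is_allowed(request):
    """Default-deny check: the action must be granted AND the context acceptable."""
    if request.action not in GRANTS.get(request.agent_id, set()):
        return False
    # Actions without a configured ceiling fall back to 0.0, i.e. effectively denied.
    return request.risk_score <= MAX_RISK.get(request.action, 0.0)
```

With these tables, the same agent can read invoices at a moderate risk score but is denied writes until the risk signal drops, which is the kind of continuously evaluated limit described above.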

Monitoring & Audit

Continuous monitoring and audit trails for AI agents are essential for verifying behavior, detecting anomalies, and supporting security operations.

Organizations should:

- Track every action taken by agents, including API calls and resource access

- Correlate agent activity across systems to identify suspicious patterns

- Maintain immutable audit logs to support investigations and compliance

Comprehensive audit trails for AI agents, covering authentication events, access decisions, and operational outcomes, provide accountability, transparency, and forensic readiness.
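One common way to approximate an immutable log in software is a hash chain, where each entry commits to the one before it. The sketch below is an assumption about how this could be done, not a description of any specific product. `AuditLog` and its field names are hypothetical, and the chain makes tampering detectable rather than impossible, so the log should still live in write-once storage.

```python
import hashlib
import json


class AuditLog:
    """Hypothetical append-only log where each entry hashes its predecessor."""

    GENESIS = "0" * 64

    def __init__(self):
        self._entries = []

    @staticmethod
    def _digest(body):
        # sort_keys gives a stable serialization so the hash is reproducible.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, agent_id, event, detail):
        prev = self._entries[-1]["hash"] if self._entries else self.GENESIS
        body = {"agent_id": agent_id, "event": event, "detail": detail, "prev": prev}
        self._entries.append({**body, "hash": self._digest(body)})

    def verify(self):
        """Recompute the chain; any edited or removed entry breaks it."""
        prev = self.GENESIS
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or entry["hash"] != self._digest(body):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("invoice-bot", "auth", "token issued")
log.append("invoice-bot", "access", "GET /invoices/42 allowed")
```

Calling `log.verify()` after any later edit to a stored entry returns `False`, which gives investigators a quick integrity check before they rely on the trail.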

Adaptation & Optimization

AI agents and their operating environments are not static. Ongoing agent lifecycle management must include:

- Re-evaluating permissions as business requirements evolve

- Adjusting governance policies when agent roles or behaviors change

- Retraining or updating models to maintain alignment with security objectives

Continuous adaptation ensures AI agents remain both effective and secure throughout their operational life.
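Re-evaluating permissions can start with a simple question: which grants has the agent not used recently? The sketch below assumes usage timestamps are available per permission. `stale_permissions`, the permission names, and the 90-day window are hypothetical choices for illustration.

```python
from datetime import datetime, timedelta, timezone


def stale_permissions(granted, last_used, now, max_idle=timedelta(days=90)):
    """Return granted permissions never used, or not used within max_idle."""
    stale = set()
    for permission in granted:
        used_at = last_used.get(permission)
        if used_at is None or now - used_at > max_idle:
            stale.add(permission)
    return stale


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
granted = {"invoices:read", "invoices:write", "reports:read"}
last_used = {
    "invoices:read": now - timedelta(days=2),
    "invoices:write": now - timedelta(days=200),
}
candidates = stale_permissions(granted, last_used, now)
# candidates == {"invoices:write", "reports:read"}
```

The result is a list of candidates for the owner to review, not an automatic revocation, since some permissions are legitimately used only rarely.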

Decommissioning

Decommissioning is the final lifecycle stage, and it is as critical as any that precedes it.

When an AI agent is retired, organizations must:

- Revoke credentials and tokens immediately

- Remove the agent from identity registries and governance workflows

- Retain relevant audit data for compliance, reporting, and investigation
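The three steps above can be sketched as a single routine. The data structures here are hypothetical stand-ins (a dict for the registry, a dict mapping tokens to agents, a list for the audit log). What the example is meant to show is the ordering: access is cut off first, and the audit trail is extended, never deleted.

```python
from datetime import datetime, timezone


def decommission(agent_id, registry, active_tokens, audit_log):
    """Retire an agent: revoke credentials, deregister, and record the event.

    registry:      dict of agent_id -> metadata (hypothetical stand-in)
    active_tokens: dict of token -> agent_id (hypothetical stand-in)
    audit_log:     list that is only ever appended to
    """
    # 1. Revoke credentials first so the agent loses access immediately.
    revoked = [tok for tok, holder in active_tokens.items() if holder == agent_id]
    for tok in revoked:
        del active_tokens[tok]

    # 2. Remove the identity from the registry.
    metadata = registry.pop(agent_id, None)

    # 3. Keep existing audit data and add a record of the retirement itself.
    audit_log.append({
        "agent_id": agent_id,
        "event": "decommissioned",
        "tokens_revoked": len(revoked),
        "was_registered": metadata is not None,
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return len(revoked)
```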

Failing to properly decommission agents results in ghost agents: dormant identities that retain access and increase the attack surface.

AI agents are one class of non-human identity (NHI), and these lifecycle controls extend across the broader NHI ecosystem. A more comprehensive NHI guide from Unosecur is coming soon.

Key Security Risks

AI agents introduce unique security risks across every stage of their lifecycle:

- Shadow Agents: Created without proper governance oversight.

- Credential Exposure: Caused by stale or unmanaged tokens.

- Over-privileged Access: Can lead to unintended data leakage.

- Autonomous Decisions: May bypass traditional policy enforcement mechanisms.

Without strong agent lifecycle management, these risks can quickly escalate into serious security incidents or compliance failures.
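Two of these risks lend themselves to simple set comparisons, sketched below. The function names and inputs are assumptions: `observed_ids` would come from logs or network telemetry, and `required` from each agent's documented purpose.

```python
def find_shadow_agents(observed_ids, registered_ids):
    """Identities seen acting in logs that no registry entry accounts for."""
    return set(observed_ids) - set(registered_ids)


def find_over_privileged(granted, required):
    """Per agent, permissions granted beyond what its documented purpose requires."""
    excess = {}
    for agent_id, permissions in granted.items():
        extra = set(permissions) - set(required.get(agent_id, ()))
        if extra:
            excess[agent_id] = extra
    return excess


shadows = find_shadow_agents(
    observed_ids=["invoice-bot", "report-bot", "unknown-7"],
    registered_ids=["invoice-bot", "report-bot"],
)
excess = find_over_privileged(
    granted={"invoice-bot": {"invoices:read", "payroll:read"}},
    required={"invoice-bot": {"invoices:read"}},
)
```

Here `shadows` contains `"unknown-7"` and `excess` flags `payroll:read` on `invoice-bot`. Both results are starting points for investigation rather than proof of compromise.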

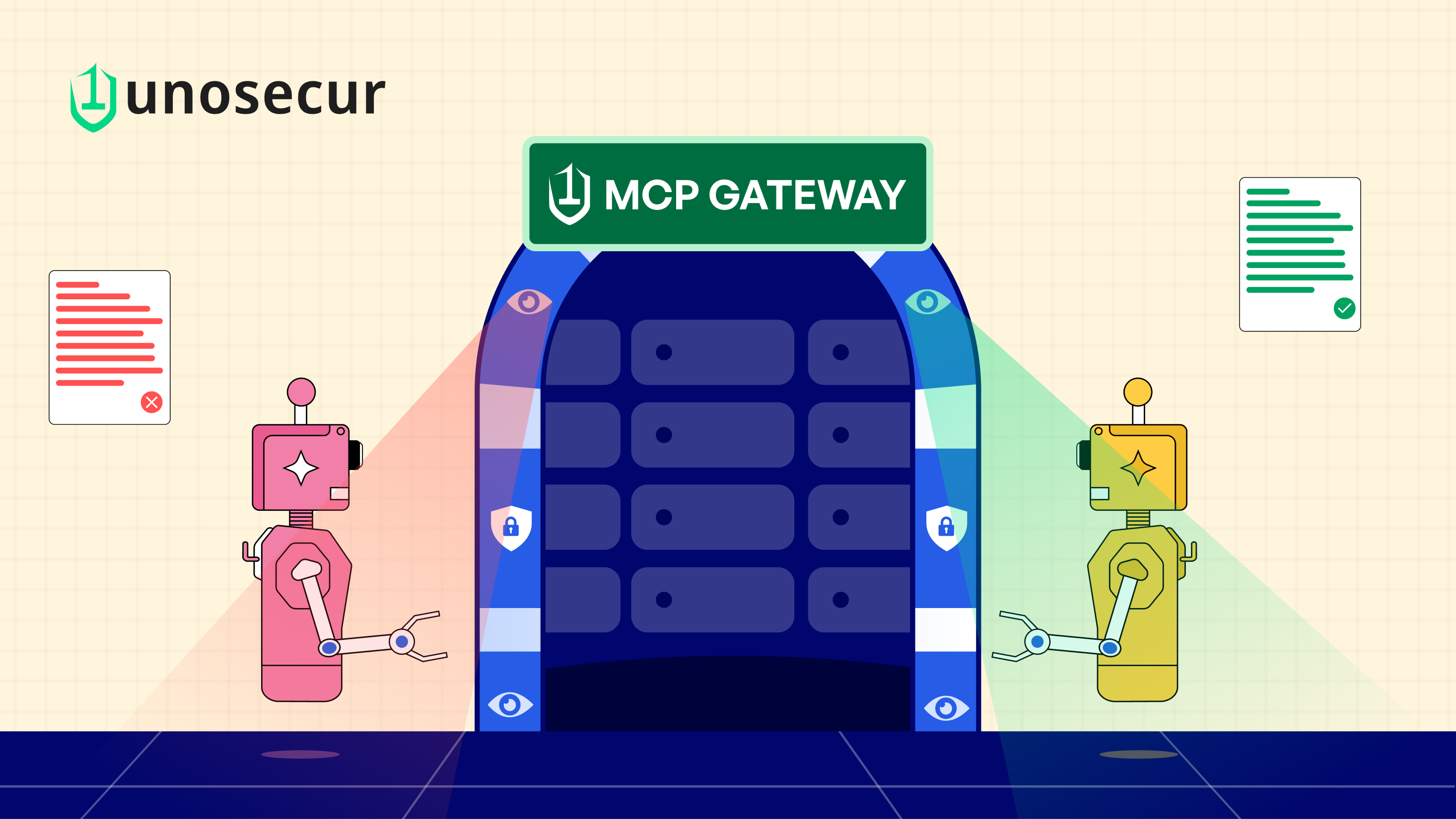

Lifecycle Security Through an IAM Lens

Modern IAM for agents combines identity federation, dynamic credential management (issuance and rotation), and tight integration with data governance and risk controls. Ongoing permission management relies on automated access reviews and attestation workflows. Together, these give an IAM-centric model consistent visibility, enforcement, and accountability for AI agents at scale.
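As one small piece of dynamic credential management, a scheduled job could flag credentials that are due for rotation. This is a sketch under stated assumptions: `due_for_rotation`, the credential identifiers, and the 30-day maximum age are hypothetical, and the actual rotation would be performed by the identity platform.

```python
from datetime import datetime, timedelta, timezone


def due_for_rotation(issued_at_by_credential, now, max_age=timedelta(days=30)):
    """Return credential ids whose age has reached max_age, in sorted order."""
    return sorted(
        credential_id
        for credential_id, issued_at in issued_at_by_credential.items()
        if now - issued_at >= max_age
    )


now = datetime(2025, 3, 1, tzinfo=timezone.utc)
issued = {
    "invoice-bot/cert-1": now - timedelta(days=45),
    "invoice-bot/cert-2": now - timedelta(days=3),
    "report-bot/key-1": now - timedelta(days=30),
}
to_rotate = due_for_rotation(issued, now)
# to_rotate == ["invoice-bot/cert-1", "report-bot/key-1"]
```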

Governance & Compliance Implications

- Enforce Ownership and Approval: Establish governance frameworks with clear ownership, approval workflows, and regular review/certification of agent permissions.

- Ensure Regulatory Alignment: Maintain alignment with data privacy, security, and industry regulations throughout the AI agent lifecycle.

- Maintain Audit Trails: Create and sustain reliable audit trails for all AI agents to demonstrate compliance, especially where transparency and traceability are mandatory.

Together, these controls produce the evidence auditors and regulators look for: who owns each agent, who approved its access, and what it did. This matters most in regulated industries, where transparency and traceability are mandatory.

Best Practices for Secure Lifecycle Management

To secure AI agents end-to-end, organizations should:

- Treat agents as first-class identities within IAM systems

- Automate agent provisioning & de-provisioning using policy-driven workflows

- Issue short-lived credentials and enforce least-privilege access

- Collect and retain detailed audit data for every lifecycle action

- Continuously monitor agent behavior and enforce analytics-driven controls

These best practices reduce risk while enabling scalable and secure adoption of AI agents.

Conclusion & Next Steps

AI agents will continue to transform enterprise automation, but their autonomy introduces new identity and security challenges. By implementing strong agent lifecycle management, enforcing creation-to-decommissioning controls, and maintaining reliable audit trails for AI agents, organizations can reduce risk while unlocking the full value of agentic systems.

Whether you are just beginning to deploy AI agents or already operating them at scale, adopting a structured lifecycle security program is essential to protect systems, data, and strategic outcomes.
